What if machines became smarter than humans and started making more intelligent machines on their own? What if they stopped working for humans and instead started employing them?

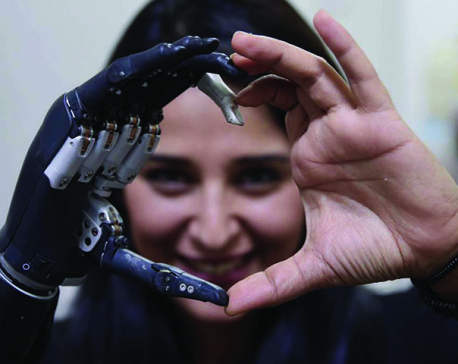

On January 2, the BBC reported on a study of the use of artificial intelligence in medical diagnosis. In the tests carried out during the study, an AI-based computer system outperformed human doctors in diagnosing breast cancer: the algorithm read mammograms and reached the correct diagnosis more often than radiologists did.

The computer model was designed jointly by Google Health and Imperial College London. It was trained on X-ray images of more than 29,000 women. Like human intelligence, AI depends on rigorous training, in this case the training of an algorithm or computer model with training data. Suppose a person has seen only green apples all his or her life. On seeing a red apple for the first time, that person would find it difficult to decide whether it was an apple or something else. The person would need a clue that it is also an apple and would then update the information in his or her brain. Subsequently, the red apple would also be recognized as an apple. Hence, the larger the reference or training data set, the more accurate the decisions. In the mammogram-reading model described above, accuracy increases with the number of X-ray images used for training. The idea is to feed in images of both cancerous and cancer-free mammograms and train the system to build a knowledge base of what is cancerous and what is not. Computer-based artificial intelligence is, simply put, a matter of training and updating the algorithm as well as its knowledge base.
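The apple example can be made concrete with a small Python sketch. It is only an illustration and not the method used in the study: the `KnowledgeBase` class, the three numeric features (redness, greenness, roundness) and the tomato and banana examples are all assumptions introduced here. The program labels a new object by finding the most similar example it has already seen, which is a simple nearest-neighbour approach.

```python
from math import dist  # Euclidean distance, available in Python 3.8+


class KnowledgeBase:
    """Toy nearest-neighbour classifier that remembers labelled examples."""

    def __init__(self):
        self.examples = []  # list of (features, label) pairs

    def learn(self, features, label):
        # "Training" here simply means storing one more labelled example.
        self.examples.append((features, label))

    def predict(self, features):
        # Answer with the label of the most similar remembered example.
        _, label = min(self.examples, key=lambda ex: dist(ex[0], features))
        return label


# Hypothetical features: (redness, greenness, roundness), each from 0 to 1.
kb = KnowledgeBase()
kb.learn((0.1, 0.9, 0.9), "apple")   # the only apples seen so far are green
kb.learn((0.9, 0.1, 0.9), "tomato")  # red and round, but not an apple
kb.learn((0.9, 0.8, 0.2), "banana")

red_apple = (0.8, 0.2, 0.9)
before = kb.predict(red_apple)  # the closest known example is the tomato

kb.learn(red_apple, "apple")    # the "clue": someone says it is an apple
after = kb.predict(red_apple)   # the updated knowledge base now says apple

print(before, "->", after)
```

Before the clue, the red apple is mistaken for a tomato; once the labelled example is added, it is recognized as an apple. Real systems replace the three hand-picked numbers with features learned from thousands of images, but the dependence on labelled training data is the same.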

It is not only sophisticated mammogram-reading programs that are intelligent. A phone camera predicting the age of the person being photographed, a Facebook page suggesting the names of people in a group photo, a Google search augmented by sponsored links related to the searched item, a YouTube page suggesting videos based on the user’s viewing history, and pharmaceutical companies developing personalized pills rather than common dosages are all examples of using computerized algorithms to make accurate decisions based on the study of a large dataset. The dataset can grow continuously as the system keeps working, so the system gains more ‘experience’, which leads to better decisions. Like us, these systems learn by doing, gain experience and then do even better.

Intelligence and humanity

Since time immemorial, intelligence has been the domain of humans. Intelligence, though defined in various ways, means the ability to perceive and gather information so as to apply it later in the form of logic or experience.

Among the billions of living organisms that populate the only planet known to be habitable, only humans have shown a perceptible capability for learning and adapting. While some lower level of intelligence has been observed in a few other animals such as apes, dogs and dolphins, the sophistication with which those animals retain and process information is not comparable to that of humans. These animals also have no perceptible means of showing whatever little intelligence they have. Only humans are intelligent enough to explore intelligence itself and even to think about infusing intelligence into machines.

At the core of human intelligence is the ability to perceive, retain and process information in order to develop logic and a set of rules for subsequent decision making. If a person touches fire for the first time and gets burnt, the related information, such as the color, texture and pattern of the fire, the experience of burning and the circumstances that caused the burn, such as getting too close to the fire, is stored in the person’s brain. That information is then used to handle subsequent encounters with fire, and the person refrains from touching it. But what about an invisible or differently colored flame? The information retained earlier may not be enough to prevent burns. In such cases, the human brain augments the existing information and adds the new perceptions to its reference knowledge base. Once the human brain is ‘trained’ on the new type of fire, subsequent encounters with it are handled with caution and care.

Thus human intelligence is a mechanism for learning patterns and incidents, correlating them with similar incidents in the future and making decisions. The combination of learning and recognizing patterns, developing logic, making decisions, re-learning and augmenting the knowledge base continues without end. It is a cycle of learning, adapting, updating and retaining.

Humans to Machines

As humans became more aware of their surroundings and cleverer, they also became more inquisitive. They were good at ‘learning’ and adapting. They were ‘intelligent’. And they could use that intelligence to become even more intelligent. Among other things, they wanted to know more about their own intelligence and cognitive abilities. As people learned more about their own intelligence, they wanted to emulate it elsewhere. They started exploring opportunities to re-create that intelligence in entities other than themselves. Humans have limited capability to alter the cognitive capability of animals, because animals are born with a certain ‘basic design’ and a level of cognitive ability that cannot be stretched, as it is ‘hard-coded’ by nature. Hence, for emulating intelligence outside the human race, the obvious arena of exploration was the world of machines. Machines are built by humans, and hence humans have total control over their design and functionality.

First came the machines that could receive, process, store and disseminate information. Called computers, these machines were precise and accurate executors of pre-set routines or commands written by humans. Though very accurate in executing predefined sequences, they were not able to ‘learn’. Learning involves updating the existing rule set based on new observations, and computers lacked that ability to update their knowledge base. Even in machines like Deep Blue, which defeated the world’s best player in chess, the premier brain game, that ability was at a relatively primitive stage. These supercomputers could crunch huge amounts of data using an existing sequence of instructions. They were early examples of super-efficient computing and of some level of AI, but they were not as feature-rich as their modern counterparts. They could not update their instructions or logic based on the observed data or complex patterns.

With the extraordinary pace of development in computing devices and the interconnectivity among them, the next frontier was to infuse human-like intelligence into the devices. This involved creating a complex mechanism of loosely connected data structures or elements, called neural networks, that could be used to recognize patterns, internalize them and update the pattern knowledge base every time they encountered a new sample. Neural networks are the digital equivalent of the networks of neurons, tens of billions of which form the information processing, cognitive and memory structure of the human brain. Whenever a data signal has to be processed, these neurons get activated and interconnected to represent a piece of information, logic or memory. Artificial intelligence systems likewise consist of neuron-like elements that get activated and aligned to form a memory or data processing element. The difference from traditional computing is that these neural network elements are generic and can align themselves to represent a distinct information set, information processing logic or pattern database. Pattern recognition and the reorientation of the artificial neural network were at the heart of the evolution of machine learning, or AI.
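How a neuron-like element ‘aligns itself’ to a pattern can be sketched in a few lines of Python. The example below is an assumption chosen for illustration, not a description of any particular system: it uses a single artificial neuron trained with the classic perceptron rule, and the `Neuron` class, the learning rate and the toy task (output 1 only when both inputs are 1) are hypothetical. Real neural networks chain many such elements together and use more elaborate update rules.

```python
class Neuron:
    """A single artificial neuron trained with the perceptron rule."""

    def __init__(self, n_inputs, learning_rate=1.0):
        self.weights = [0.0] * n_inputs  # strength of each input connection
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, inputs):
        # The neuron "fires" (returns 1) when the weighted sum is positive.
        total = self.bias + sum(w * x for w, x in zip(self.weights, inputs))
        return 1 if total > 0 else 0

    def train(self, samples, epochs=20):
        for _ in range(epochs):
            for inputs, target in samples:
                error = target - self.predict(inputs)
                if error != 0:
                    # Wrong answer: nudge the connections toward the target.
                    self.bias += self.learning_rate * error
                    self.weights = [
                        w + self.learning_rate * error * x
                        for w, x in zip(self.weights, inputs)
                    ]


# Toy pattern (an assumption): fire only when both inputs are switched on.
samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

neuron = Neuron(n_inputs=2)
untrained = [neuron.predict(x) for x, _ in samples]  # knows nothing yet
neuron.train(samples)
results = [neuron.predict(x) for x, _ in samples]

print(untrained, "->", results)
```

Nobody writes the rule ‘both inputs must be on’ into the program. The neuron starts with all connections at zero, gets some answers wrong, and adjusts its weights after each mistake until its outputs match the targets. That self-adjustment is what separates it from a fixed, pre-set routine.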

Machines for Tasks

AI is not limited to pinpointing cancerous or likely cancerous images among mammograms. It can be, and has been, used for many different purposes. Like a sniffer dog or a human being specifically trained for an expert task, an AI-based algorithm or model designed for one task can differ from a model developed for another. For example, an AI model developed for a driverless vehicle will be different from one developed to read mammograms or to predict stock market prices. They differ in the inputs they take, the outputs they present and the steps they follow to process the data specific to their field. But fundamentally, they are the same.

They all have to be trained on a large volume of predefined data. For example, a computer model for a driverless car has to be fed a lot of data about roads, traffic, obstacles, traffic rules, speed and so on. A stock market predictor has to be trained on a lot of stock market data with predefined results and outputs. It is like a child learning to walk: first on the flat floor of a room, then outside the room, then up and down the stairs, then in an open area, then across the road, on a slippery slope, on a muddy surface, on a narrow strip and so on. The more she is trained, the better she will be at handling different walkways and paths. The fewer the surprises, the lower the chances of that child stumbling, falling down or getting lost.

The idea and realization of self-learning computer algorithms are not entirely new. There have long been niche examples in which a computer algorithm was trained with training data and the trained algorithm was then used to make subsequent decisions.

The basic idea of AI goes back to 1950, when the British computer scientist and mathematician Alan Turing devised the ‘Turing Test’ to differentiate machine intelligence from human intelligence. The idea of machine intelligence thus came to the fore even before the wide development and use of computers. It was probably also fueled by science fiction envisaging smart and intelligent machines.

Should we worry?

On February 10, CNN reported on Clearview AI, a facial recognition and image matching startup that uses AI to match facial images against billions of archived images. The CEO, Hoan Ton-That, was reported to have given assurances about the responsible use of the huge archive and of the capabilities of the surveillance and facial recognition system. However, it is a matter of concern for the public as well as the authorities, as serious questions of privacy, persecution and misuse arise. What if the system were used to track people’s activities and peer into their private lives, all without their being aware of it? It could even lead to racial, political and religious profiling of individuals. This is against the spirit of personal freedom, the right to privacy and responsible governance. Ton-That has given assurances that the data will not be misused or placed in rogue hands. But how can a free state or a freedom-loving individual be sure? In an era when privacy is on the decline, when people are increasingly living in a glass house and when the prospect of an Orwellian society is becoming more and more real, such technological advances and data monopolization are a matter of real concern.

As the world sees more cases like Cambridge Analytica, Clearview AI and state surveillance, there is real concern about the good and bad uses of AI and other ultra-advanced digital technologies. How can we be sure that thousands of our photos, tweets, Facebook posts, online purchases and friendly chats are not being peered into and analyzed to track our activities and to see whom we meet and what we do, so that the information can be used for targeted marketing, advertising or even criminal activities such as bullying, blackmail or other forms of exploitation?

There are a lot of reasons to be pessimistic and worried. The late Stephen Hawking identified artificial intelligence as one of the major existential threats to mankind. Bill Gates and Elon Musk are among many other big names cautioning humanity about the risks posed by over-intelligent machines. While very smart machines could work side by side with humans in quickly and accurately diagnosing a probable breast cancer or predicting a stock market meltdown, what if machines became smarter than humans and started making more intelligent machines on their own? What if they stopped working for humans and started employing them? Would the human race be able to handle such an invasion of hyper-intelligent machines? Could we outsmart the machines and stop them from annihilating us?
