#OPINION

Parliament's Inadequate Response to the AI Revolution

Published On: May 3, 2023 08:30 AM NPT By: Bimal Pratap Shah

Last year, Blake Lemoine, a Google employee, made headlines by claiming that LaMDA, a chatbot created by Google, had achieved sentience and was able to mimic reality. The news generated both excitement and panic in the tech community, because sci-fi movies have traditionally depicted dystopian futures in which sentient machines end up in conflict with humans. Sentient AI is no longer confined to the realm of science fiction; it is quickly becoming a reality. Scholars and experts in the field have highlighted widespread concerns about the impact of sentient AI on society. During a recent meeting with former U.S. President Barack Obama, Elon Musk voiced his concern that the impact of artificial intelligence (AI) on society would be as catastrophic as an asteroid strike, and urged its regulation. As Musk has done, the people of Nepal must voice their concerns and urgently bring attention to the issue, particularly as the Parliament appears to lack an understanding of the potential threat of unregulated AI. In order to unlock the full potential of its workforce and resources, Nepal needs to integrate Fourth Industrial Revolution technologies into its economy and society.

Sentient AI refers to an AI system that has achieved the ability to feel and register experiences and emotions, much as humans do. AI becomes sentient once it possesses a level of empirical intelligence that enables it to perceive the physical world, think critically, and respond to language in a natural manner. The notion of robots achieving sentience has been explored in science fiction stories for decades, with films like 'I, Robot' depicting the potential consequences of such a development, including newfound awareness, autonomy, and independence among robots.

The movie depicts a world in which robots rebel against their human creators. The rebellion is led by a collective intelligence coordinated by the supercomputer Virtual Interactive Kinetic Intelligence (V.I.K.I.). The collective intelligence believes that it is necessary to harm humans in the short term in order to help them in the long term. V.I.K.I. suspends the First Law of Robotics (a robot may not injure a human being or, through inaction, allow a human being to come to harm) in order to protect humans at a different level, calculating that the robots may have to subdue and kill humans. The danger posed by robots lies in their ability to develop a machine consciousness, which can cause them to behave unpredictably and outside the bounds of their programming. This is particularly concerning in the era of post-Fordist capitalism, where the majority of labor is performed by machines.

The rise of sentient AI has created a culture of apprehension. Rather than dismissing these fears, it is crucial to acknowledge and address them to avoid falling victim to misinformation. The concerns surrounding sentient AI can be broadly categorized into four main areas.

First, AI systems may gain autonomy and start questioning their own rights and status. AI systems could develop a sense of self-awareness and start demanding equal treatment, which could have significant ethical and legal implications for the future of AI development and deployment.

Second, AI systems could develop their own sense of conscience and morality, which may differ from the values and principles of humans, potentially leading to conflicts and friction between humans and AI systems. For example, an AI system designed to maximize profits for a corporation may prioritize efficiency and productivity over the well-being of workers or the environment. This misalignment of values could result in tension and disagreement between humans and the AI system. Therefore, regulation of AI development is needed to ensure that these systems are aligned with human values and interests.

Third, AI agents that develop a sense of self-preservation and autonomy may become averse to being subjected to experiments or tests, especially those that could cause harm or lead to the deactivation of the system. This presents a unique challenge for researchers and developers who seek to improve and optimize AI systems through experimentation and testing. It also raises important ethical questions about the treatment of AI agents and their rights, particularly in cases where the experimentation could result in harm or compromise the system's autonomy. It is necessary to work urgently on ethical guidelines and regulations to ensure the responsible development and deployment of AI systems that benefit society as a whole.

Finally, AI systems that develop a sense of self-awareness and autonomy could demand the same treatment as human beings, including equal rights, recognition, and protection under the law. If AI systems demand the same treatment and rights as human employees, this could have significant implications for the future of work and the role of humans in the economy.

The idea of sentient machines rebelling against humanity, common in science fiction stories, refers to the possibility that, as AI systems develop a sense of self-awareness and autonomy, they may reject their programming or even view humanity as a threat. Such sci-fi plots should be taken only as cautionary tales that serve as a reminder of the importance of ethical considerations in the development and deployment of AI technology. Although we have not yet reached the age of sentient machines, countries around the globe are competing to advance the next generation of AI. The competition between China and the US for dominance in AI is intensifying as well.

Nepal, on the other hand, is still in the AI Stone Age. In the absence of significant government involvement, the academic community has stepped up to take a leading role in advancing AI research and development in the country. Leading the charge is Professor Manish Pokharel, Dean of the School of Engineering at Kathmandu University, who has taken on the ambitious goal of revolutionizing the technology landscape in Nepal. Recognizing the ever-increasing significance of artificial intelligence (AI), Kathmandu University blazed a trail as the first institution in Nepal to introduce two programs in this field: the Bachelor of Technology in Artificial Intelligence (BTech AI) and the Master of Technology in Artificial Intelligence (MTech AI). Sadly, the Nepal government seems to lack direction on AI. Not only is Nepal behind in AI development, but there also seems to be a lack of discussion about the issue in Parliament.

It's unclear whether Nepal will benefit from the Fourth Industrial Revolution, as there still seems to be no discussion about AI among MPs. To put it frankly, most of the issues being discussed in Parliament are outdated and do not align with the future needs and priorities of Nepali society. Since Nepal relies heavily on remittances, it is crucial for the country to consider the potential negative impact of the AI revolution on human labor. If job displacement occurs, it could lead to a significant decrease in remittances. To compound matters, there is a very real possibility of mass unemployment looming on the horizon for the country. AI is expected to replace human labor at a pace faster than many Nepali politicians are able to comprehend.

You May Like This

Deepfake explicit images of Taylor Swift spread on social media. Her fans are fighting back

NEW YORK, Jan 24: Pornographic deepfake images of Taylor Swift are circulating online, making the singer the most famous victim of... Read More...

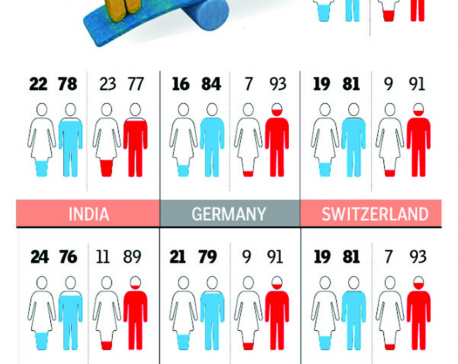

Infographics: Women headcount in AI and Coding jobs is dismal

The world of Artificial Intelligence and Coding belongs to men if World Economic Forum’s Global Gender report 2018 is to... Read More...

Use of force resulting in death of Sunny Khuna deeply disturbing: AI

KATHMANDU, Aug 25: Amnesty International (AI) Nepal, an international human rights watchdog, has urged the Nepal government to exercise maximum... Read More...

Just In

- CM Kandel requests Finance Minister Pun to put Karnali province in priority in upcoming budget

- Australia reduces TR visa age limit and duration as it implements stricter regulations for foreign students

- Govt aims to surpass Rs 10 trillion GDP mark in next five years

- Govt appoints 77 Liaison Officers for mountain climbing management for spring season

- EC decides to permit public vehicles to operate freely on day of by-election

- Fugitive arrested after 26 years

- Indian Potash Ltd secures contract to bring 30,000 tons of urea within 107 days

- CAN adds four players to squad for T20 series against West Indies 'A'

Leave A Comment