#OPINION

The AI Age: Redefining Politics, Democracy, and Society on Social Media

Published On: June 20, 2024 08:40 AM NPT By: Mahesh Kushwaha

The author is a Research Fellow at the Centre for Social Innovation and Foreign Policy (CESIF). news@myrepublica.com

I doubt it would be an exaggeration to claim that almost every one of us has been at either the giving or the receiving end of those corny and obnoxious “Good Morning” or “Best Wishes” messages forwarded via Facebook or WhatsApp. While a gradual acculturation to ‘social media norms’ may have instilled the decency desired in cross-generational exchanges, no credible indicator signals any decline in the overall consumption of multimedia content online. A comically common occurrence is parents reprimanding their kids for using mobile phones too late at night while they themselves spend hours scrolling through TikTok and Facebook videos. The younger generation stays glued to their screens watching Instagram reels, meticulously curated by algorithms to fit their personality, mood, and intrinsic need for validation, which they satisfy by sharing the reels among their social circles online. Behind the seemingly harmless digital consumption, however, the advent of Artificial Intelligence (AI) in the internal workings of social media platforms and the proliferation of AI-generated content on these platforms present serious concerns for Nepali society and politics, which require critical reflection.

Before delving into the implications of AI on democracy and politics, let’s first discuss how AI algorithms shape and sometimes dictate our lifestyles today, be it through online shopping, a simple Google search, or social media platforms such as Facebook, Instagram, TikTok, and YouTube. Most digital platforms, particularly social media, are notorious for their addictive design. These sites use AI algorithms to create a personalized content feed for each individual that can keep them hooked on the platforms for as long as possible. To personalize each feed, Facebook uses four steps: creating an inventory of content from posts shared by friends and pages we follow; sorting them based on hundreds of thousands of signals, such as prior engagement patterns, social network interaction, and relevance; predicting what content an individual is more likely to engage with; and developing a relevancy score for each piece of content. YouTube’s recommender algorithm considers factors such as clicks, watch time, sharing, likes, and dislikes to sort videos and curate a personalized feed for each user. TikTok’s highly coveted recommender system uses non-stationary training data to keep up with users’ changing interests and moods and recommend remarkably relevant content. Even Google Search and job portals use highly personalized recommendation filters to suggest the ‘most relevant’ items and content to individuals. Netflix-like streaming platforms also use sophisticated recommender systems to rank and suggest personalized content most likely to be ‘consumed’ by each user.
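For readers who want to see the four steps concretely, here is a minimal sketch in Python. It is a toy illustration under stated assumptions, not any platform's actual system: the `Post` type, the function names, the two signals, and the 0.7/0.3 weights are all hypothetical, and a fixed weighted sum stands in for what would in practice be a learned model drawing on far more signals.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    author: str
    topic: str


def build_inventory(posts, followed):
    # Step 1: inventory. Keep only posts from friends and pages the user follows.
    return [p for p in posts if p.author in followed]


def extract_signals(post, history):
    # Step 2: signals. Two toy signals (an assumption for illustration):
    # the share of past engagements on this topic and with this author.
    total = len(history) or 1
    topic_share = sum(1 for h in history if h.topic == post.topic) / total
    author_share = sum(1 for h in history if h.author == post.author) / total
    return {"topic_share": topic_share, "author_share": author_share}


def predict_engagement(signals):
    # Step 3: prediction. Hypothetical fixed weights replace a trained model.
    return 0.7 * signals["topic_share"] + 0.3 * signals["author_share"]


def rank_feed(posts, followed, history):
    # Step 4: relevancy score. Score every inventory post, highest first.
    inventory = build_inventory(posts, followed)
    scored = [(predict_engagement(extract_signals(p, history)), p) for p in inventory]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(p.post_id, round(score, 2)) for score, p in scored]


# Example: a user who has mostly engaged with political posts from page_a.
history = [
    Post("h1", "page_a", "politics"),
    Post("h2", "page_a", "politics"),
    Post("h3", "page_b", "sports"),
]
candidates = [
    Post("p1", "page_b", "sports"),
    Post("p2", "page_a", "politics"),
    Post("p3", "page_c", "politics"),  # page_c is not followed, so it is dropped
]
feed = rank_feed(candidates, {"page_a", "page_b"}, history)
print(feed)  # [('p2', 0.67), ('p1', 0.33)]
```

Even in this toy version, the post that resembles what the user already engaged with rises to the top, and content from sources outside the user's network never enters the ranking at all.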

While the AI-powered recommender models may curate highly accurate and useful content for a user, the implications of such filtering are manifold. For instance, our digital footprint and prior inquiries inform subsequent searches on Google; searching for jobs on an online portal leads us to vacancies that its recommender system decides we are fit for; watching a crime thriller on Netflix feeds us more thriller content; watching videos of cute puppies on YouTube exposes us to even more such videos; and reading an article about Balen or Rabi Lamichhane leads us to more such content. Although new AI tools are being deployed to minimize the technological limitations of the AI-enabled recommender systems that reinforce biases, perpetuate discrimination, and deny equal access to information for all, they fall short in mitigating the socio-political consequences of these digital consumption patterns, which are particularly grave for less developed countries with little AI literacy and a significant digital divide.

A common phenomenon that the consumption of highly personalized social media content gives rise to is that of filter bubbles. AI systems and algorithms that personalize content for us on social media, based on our past consumption patterns, filter out the content that they determine would be irrelevant to us. Instead, they feed us content that we like or are more likely to agree with, creating a state of intellectual isolation where we are overexposed to certain ideas and perspectives. The resulting insulation from diverse perspectives and varying information sources often puts us in “echo chambers,” distorting our perception of reality. This phenomenon results in societal and political polarization, with little room for healthy discussions, which has been quite common in Nepal recently. For instance, the U.S.-funded Millennium Challenge Corporation (MCC) generated fierce debates in Nepal, polarizing the entire country into supporting and opposing blocs. On domestic political issues and leaders, too, the civic and digital media spaces appear increasingly polarized, with extreme views on either side of the spectrum. Balen, Rabi Lamichhane, Harka Sampang, monarchy, secularism, federalism, and republicanism are some common topics around which we see competing filter bubbles and echo chambers, with many blind supporters and critics. A Balen supporter, for instance, is likely to see only filtered content about his good deeds and consume opinions and perspectives that portray him as a hero, a savior. In contrast, Balen's critics are continuously exposed only to his controversial actions and policy choices that taint his image. As a result, we find a society that is divided between two extreme opinions of the same leader, with little room or willingness for convergence.

What exacerbates the situation is the proliferation of mis/disinformation on social media, particularly on politically or socio-culturally sensitive topics. Tech-savvy leaders or malign actors may try to exploit this very phenomenon by launching coordinated mis/disinformation campaigns. Such campaigns often use generative content such as manipulated text, audio, images, and deepfake videos, which have become a common tactic for political parties and leaders across the globe. The Indian general election saw a significant use of deepfake videos, prompting the Election Commission to take action against the use of such AI-manipulated videos in election campaigns. In Nepal, right-wing groups have often exploited these echo chambers to incite religious and communal conflicts, particularly in the southern plains along the Nepal-India border. Social media algorithms and personalized feeds amplify the reach and intensity of the incidents, quickly spreading them across cities.

AI’s utility in today’s digital world continues to grow, with almost no aspect of human life untouched by transformative innovation. It has been helping transcend human limitations to take incredible leaps in the fields of medicine, finance, education, manufacturing, and so on. However, its impact on society and politics through digital platforms remains a cause of serious concern for countries across the globe. As discussed above, AI tools and algorithms pose a significant threat to democratic principles and societal harmony, not just implicitly but directly. In the year 2024, when over 40% of the world’s total population is voting, governments and leaders have been frantically trying to minimize AI’s negative impacts on democracy and elections through different policies, executive orders, and regulatory frameworks. The EU has been a pioneer in this endeavor, passing its comprehensive AI Act and offering lessons for countries worldwide. For the digitally divided Nepal, with very low AI literacy, AI-induced digital innovation is a double-edged sword whose full potential can be realized only through robust public-private collaboration that promotes AI literacy and takes preemptive regulatory measures without depriving the country of the immense positive growth that AI can generate.

You May Like This

Hijacking democracy

Algorithms will soon be a big part of human society. It is already being used to determine what people see... Read More...

How AI can promote social good

BOSTON – Artificial intelligence is now increasingly present in corporate and government decision-making. And although AI tools are still largely... Read More...

How algorithms (secretly) run the world

When you browse online for a new pair of shoes, pick a movie to stream on Netflix or apply for... Read More...

Just In

- No best employee award for eighth consecutive year

- NAC blames govt and CAAN for failure to generate profit

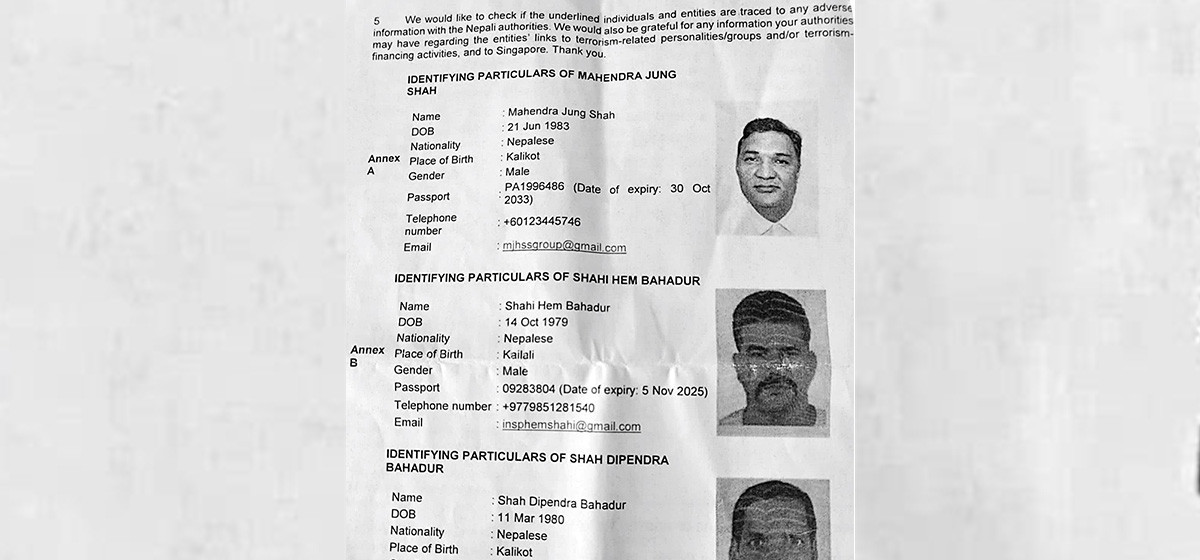

- ‘Bribe-seeking’ DSP Shahi suspected of involvement in terrorist activities

- Foreign companies took over Rs 150 billion in dividends from Nepal in eight years

- PM Oli confers awards on 40 civil servants

- Germany to provide Rs 7.5 billion in grant to Nepal

- PM Oli says no immediate prospects for salary increment for civil servants

- Cyclist dies in motorcycle collision in Nawalparasi

Leave A Comment